Khai Nguyen

Hi! I’m Khai, a final-year Ph.D. candidate in the Department of Statistics and Data Sciences at the University of Texas at Austin. I am fortunate to be advised by Professor Nhat Ho and Professor Peter Müller, and to be affiliated with the Institute for Foundations of Machine Learning (IFML). I graduated from Hanoi University of Science and Technology with a Bachelor’s degree in Computer Science. Before joining UT Austin, I was an AI Research Resident at VinAI Research (acquired by Qualcomm AI Research) under the supervision of Dr. Hung Bui.

I’m on the academic job market (postdoc or assistant professor)! Please feel free to contact me if you are interested in my research!

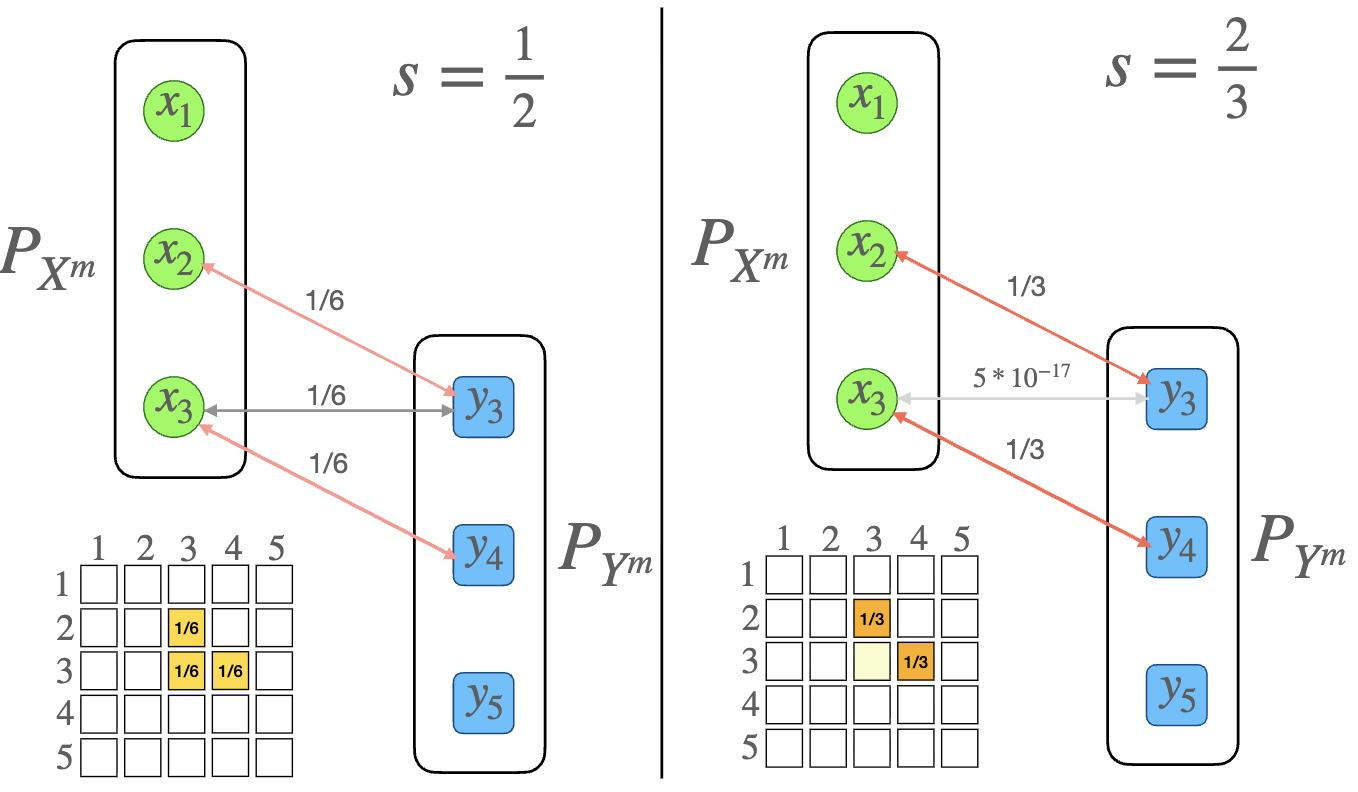

(This video was created using my proposed energy-based sliced Wasserstein distance.)

Research: My research addresses both fundamental and applied problems in statistics, statistical machine learning, and deep learning.

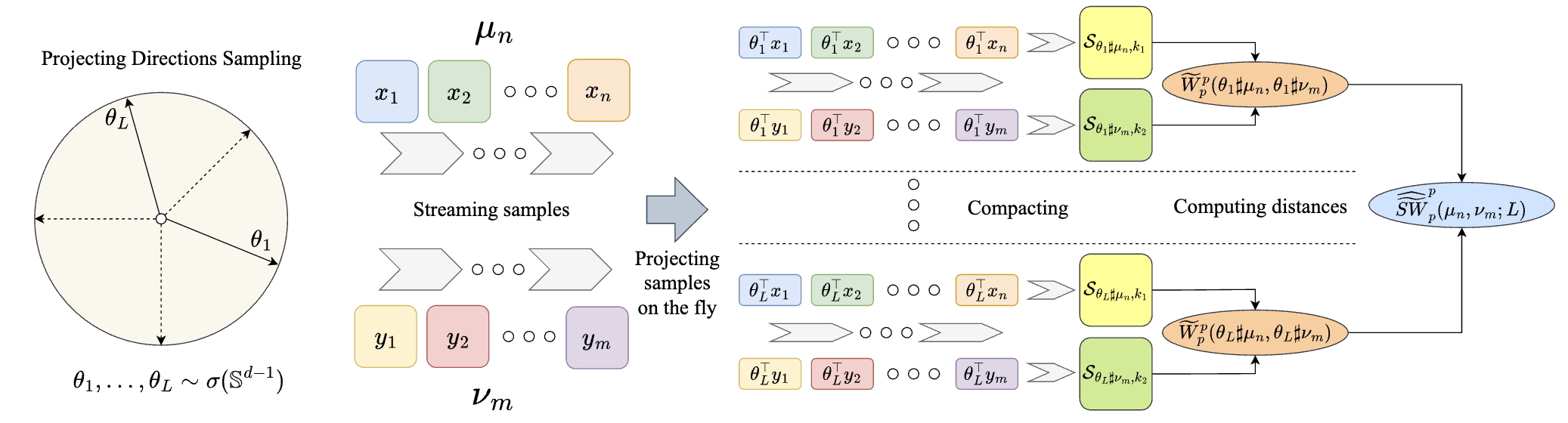

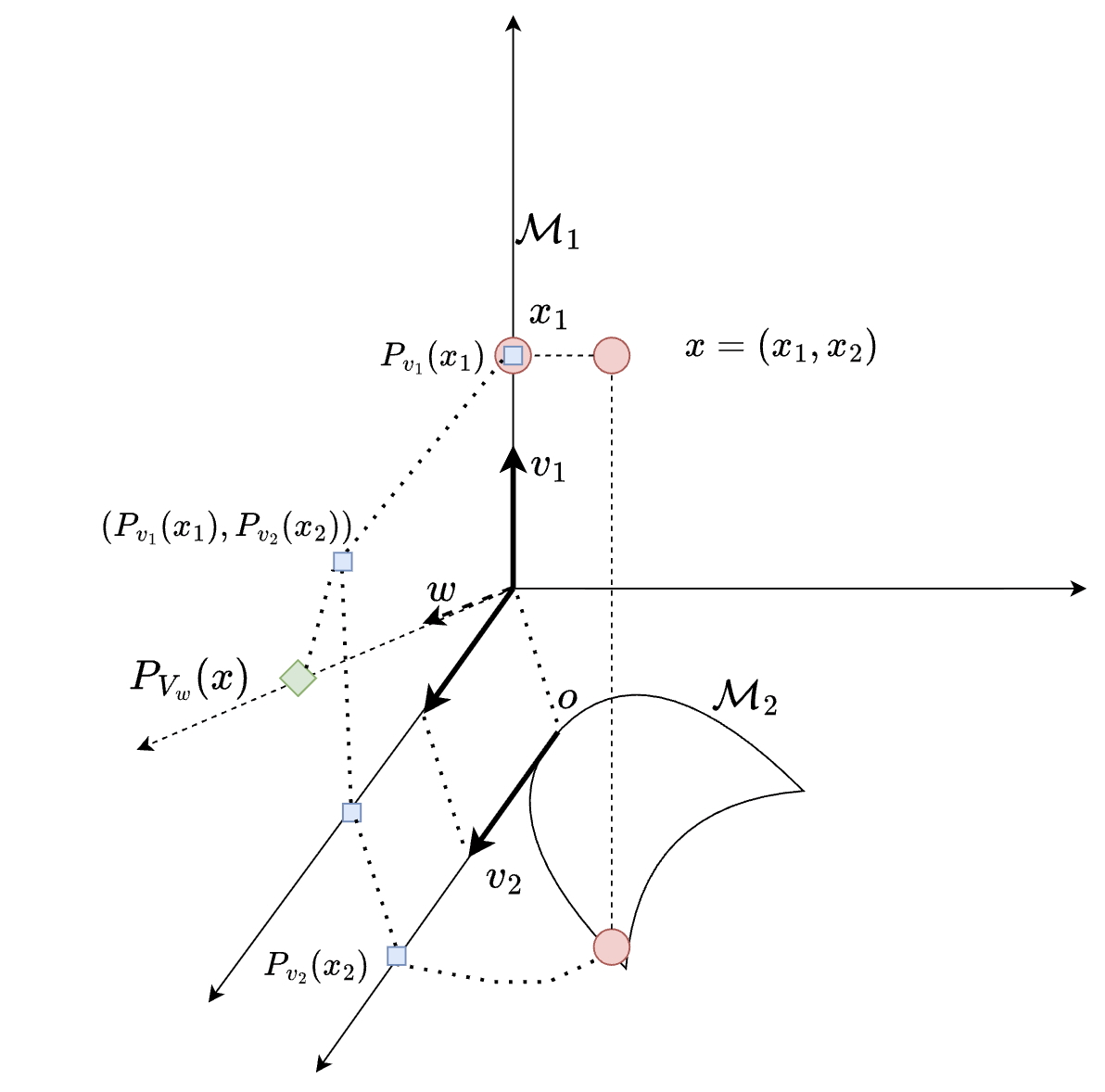

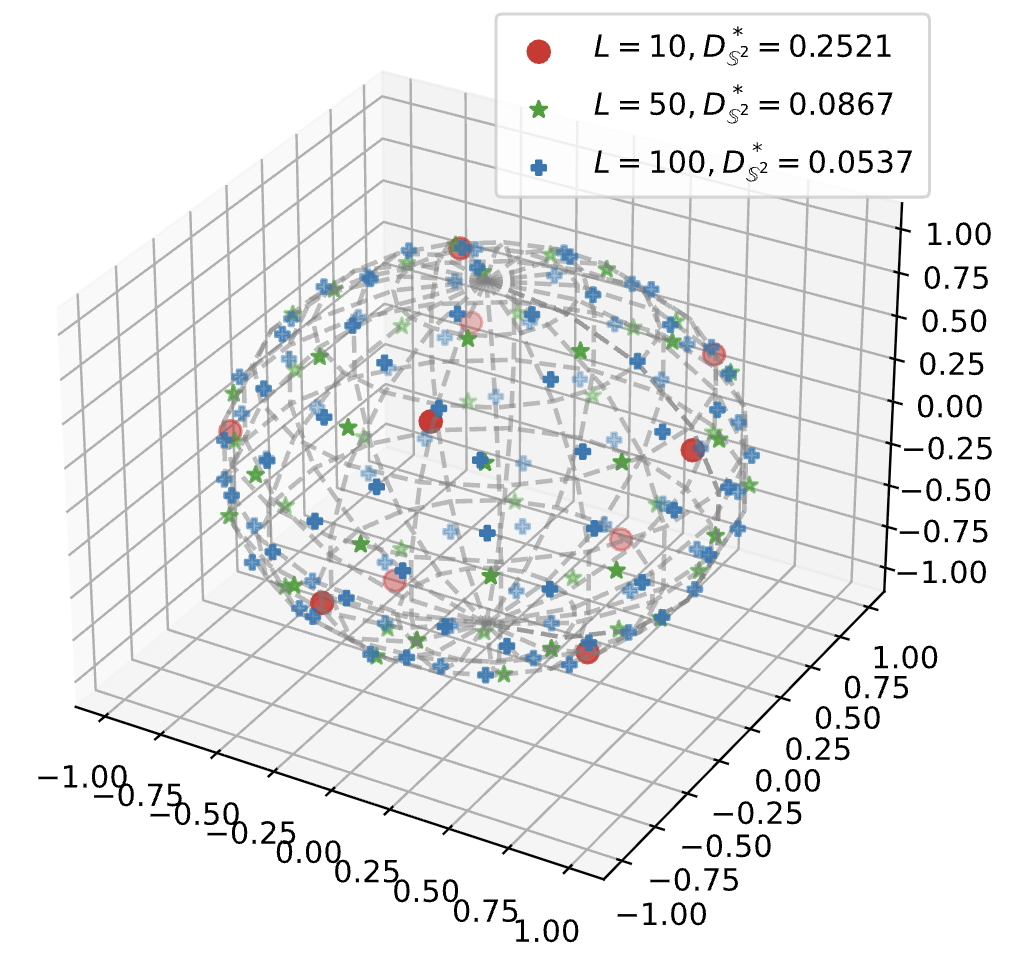

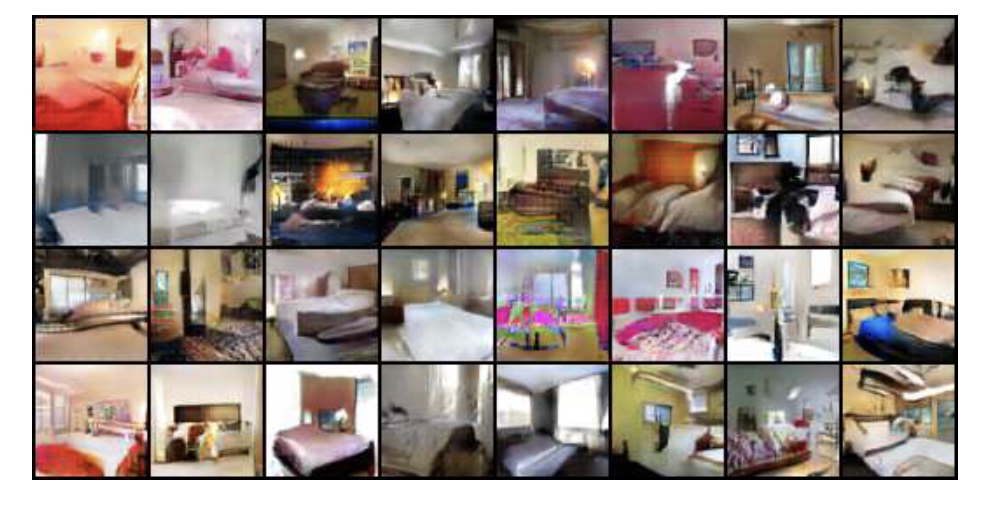

1. Computational Optimal Transport. My research makes Optimal Transport scalable for statistical inference (with low time complexity, space complexity, and sample complexity) through a random projection approach known as sliced optimal transport (SOT). My work focuses on four key sub-domains of SOT: Monte Carlo methods, generalized Radon transform, weighted Radon transform, and nonparametric estimation. In addition, I contribute to variational problems in SOT, such as the sliced Wasserstein barycenter and sliced Wasserstein kernels. Finally, I broaden the applications of SOT across machine learning, statistics, and computer graphics and vision. I wrote a monograph on SOT, providing a synthesized introduction to the topic.

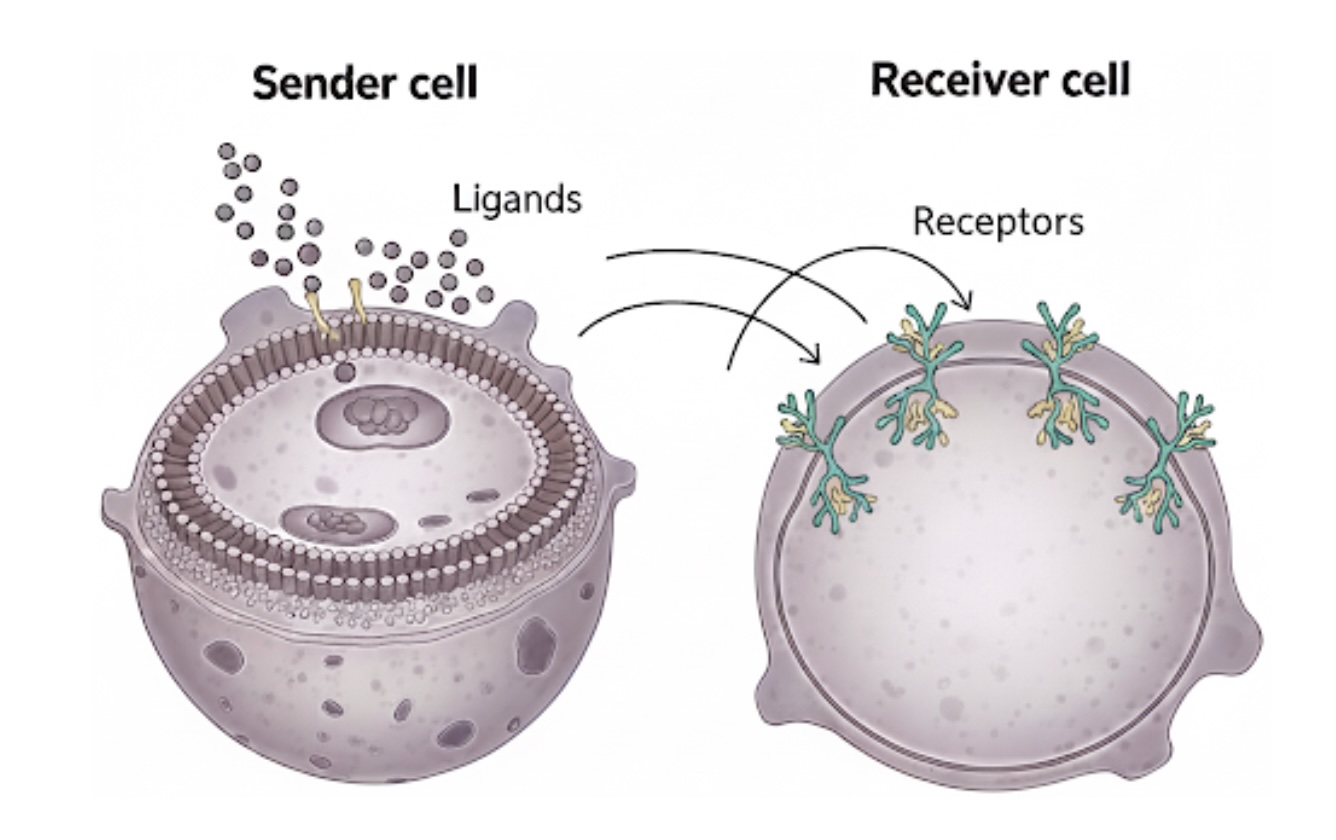

2. Statistical Inference and Decision Making. I formulate new inference and decision problems, such as Bayesian multivariate density-density regression and random partition summarization with a decision-theoretic approach. I also leverage OT and SOT to improve the scalability and accuracy of statistical estimation in machine learning applications, including generative modeling, representation learning, and domain adaptation.

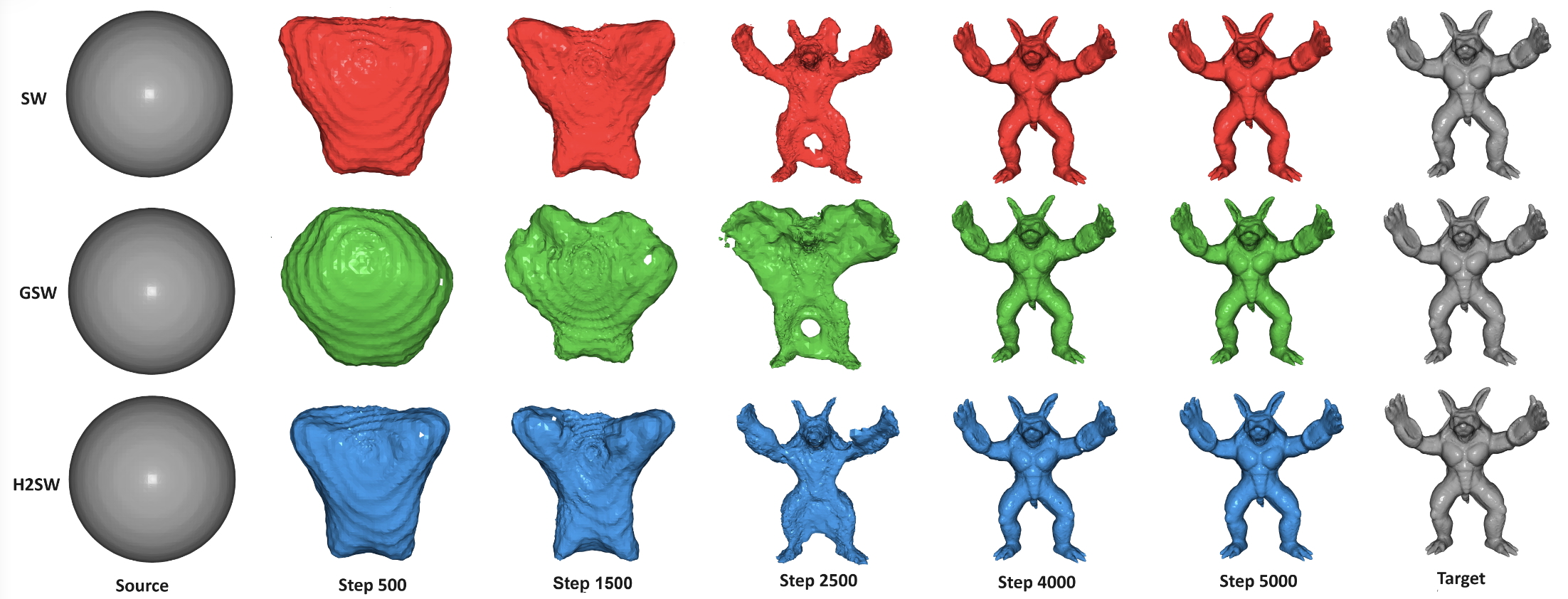

3. Geometric Data Processing. Geometric data, such as 3D point clouds and meshes, can be naturally represented as distributions over geometric spaces. I develop scalable statistical models for deforming, reconstructing, and matching 3D shapes, enabling applications in medical imaging (e.g., cortical surface reconstruction) as well as computer graphics and vision.

News

| Nov 03, 2025 | My monograph An Introduction to Sliced Optimal Transport is published at Foundations and Trends® in Computer Graphics and Vision. |

|---|---|

| Sep 18, 2025 | 1 paper Unbiased Sliced Wasserstein Kernels for High-Quality Audio Captioning is accepted at NeurIPS 2025. |

| May 01, 2025 | 1 paper Lightspeed Geometric Dataset Distance via Sliced Optimal Transport is accepted at ICML 2025. |

| Mar 07, 2025 | My proposal Summarizing Bayesian Nonparametric Mixture Posterior - Sliced Optimal Transport Metrics for Gaussian Mixtures is accepted at The Bayesian Young Statisticians Meeting 2025 as a talk in a session with discussion. |

| Feb 27, 2025 | I’m thrilled to be awarded a travel grant for International Conference on Bayesian Nonparametrics (BNP 14). |

| Feb 26, 2025 | I’m thrilled to be awarded a UT Austin Outstanding Graduate Research Fellowship which is a merit-based fellowship awarded based on academic achievements, research accomplishments, and potential for future contributions. |

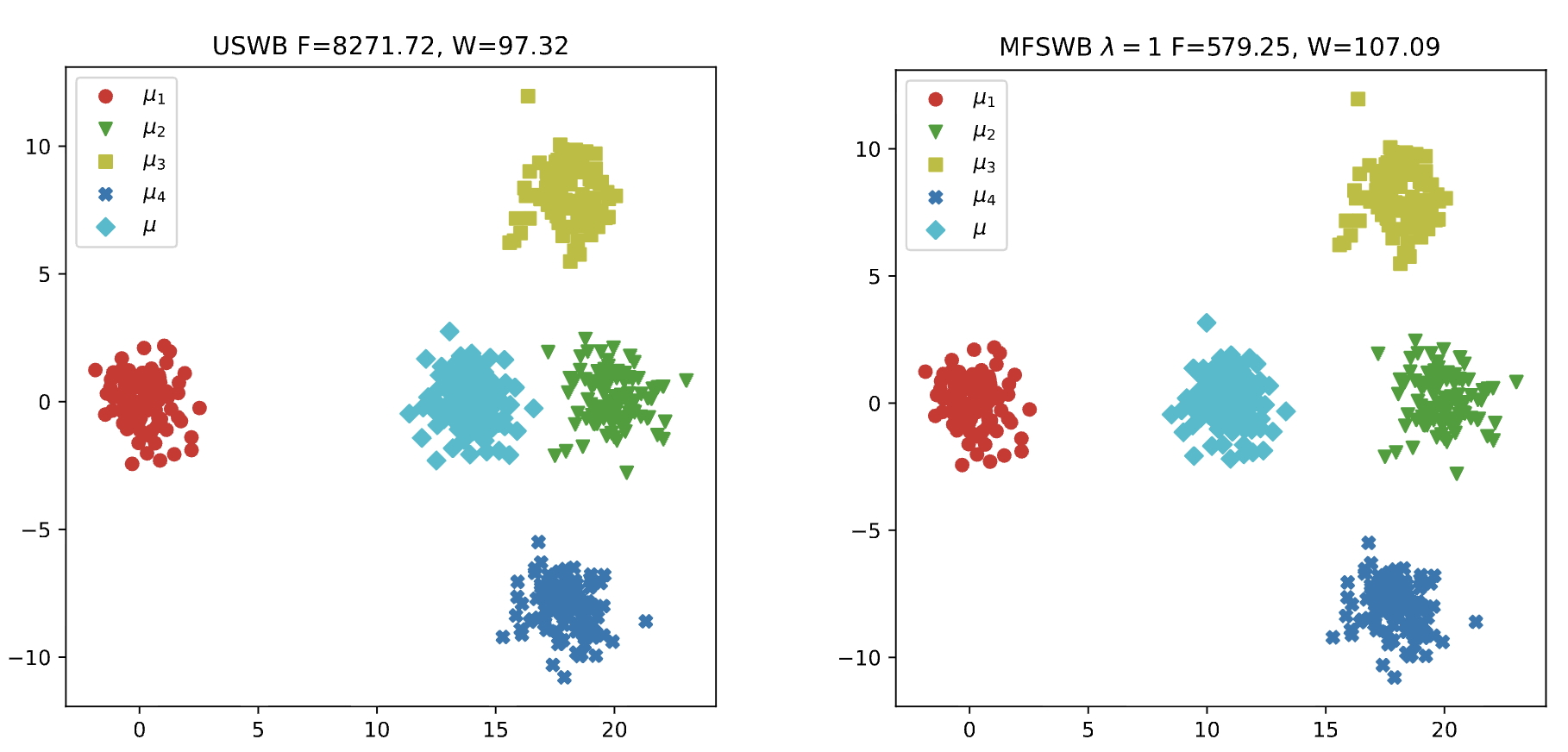

| Feb 11, 2025 | Our paper Towards Marginal Fairness Sliced Wasserstein Barycenter is selected as a spotlight at ICLR 2025. |

| Jan 26, 2025 | My proposal Summarizing Bayesian Nonparametric Mixture Posterior - Sliced Optimal Transport Metrics for Gaussian Mixtures is accepted at 14th International Conference on Bayesian Nonparametrics as a contributed talk. |

| Jan 22, 2025 | 1 paper Towards Marginal Fairness Sliced Wasserstein Barycenter is accepted at ICLR 2025. |

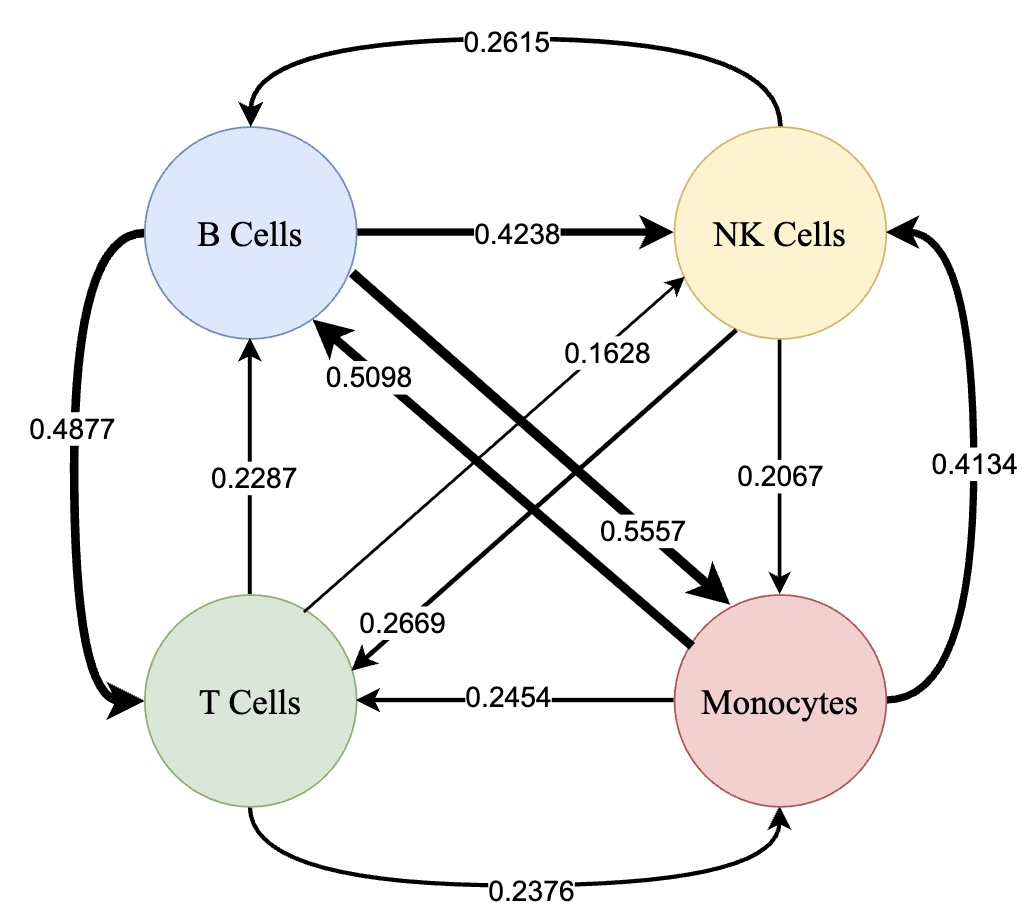

| Sep 26, 2024 | 1 paper Hierarchical Hybrid Sliced Wasserstein: A Scalable Metric for Heterogeneous Joint Distributions is accepted at NeurIPS 2024. |

| May 01, 2024 | 1 paper Sliced Wasserstein with Random-Path Projecting Directions is accepted at ICML 2024. |

| Feb 27, 2024 | 1 paper Integrating Efficient Optimal Transport and Functional Maps For Unsupervised Shape Correspondence Learning is accepted at CVPR 2024. |

| Jan 19, 2024 | 2 papers Towards Convergence Rates for Parameter Estimation in Gaussian-gated Mixture of Experts, On Parameter Estimation in Deviated Gaussian Mixture of Experts are accepted at AISTATS 2024. |

| Jan 16, 2024 | 4 papers Quasi-Monte Carlo for 3D Sliced Wasserstein - Spotlight Presentation, Sliced Wasserstein Estimation with Control Variates, Diffeomorphic Deformation via Sliced Wasserstein Distance Optimization for Cortical Surface Reconstruction, and Revisiting Deep Audio-Text Retrieval Through the Lens of Transportation are accepted at ICLR 2024. |

| Sep 21, 2023 | 4 papers Energy-Based Sliced Wasserstein Distance, Markovian sliced Wasserstein distances: Beyond independent projections, Designing robust Transformers using robust kernel density estimation, and Minimax optimal rate for parameter estimation in multivariate deviated models are accepted at NeurIPS 2023. |

| Apr 24, 2023 | 1 paper Self-Attention Amortized Distributional Projection Optimization for Sliced Wasserstein Point-Cloud Reconstruction is accepted at ICML 2023. |

| Jan 20, 2023 | 1 paper Hierarchical Sliced Wasserstein Distance is accepted at ICLR 2023. |

| Sep 14, 2022 | 4 papers Revisiting Sliced Wasserstein on Images: From Vectorization to Convolution, Amortized Projection Optimization for Sliced Wasserstein Generative Models, Improving Transformer with an Admixture of Attention Heads , and FourierFormer: Transformer Meets Generalized Fourier Integral Theorem are accepted at NeurIPS 2022. |

| Apr 24, 2022 | 2 papers Improving Mini-batch Optimal Transport via Partial Transportation and On Transportation of Mini-batches: A Hierarchical Approach are accepted at ICML 2022. |

| Jan 24, 2021 | 2 papers Distributional Sliced-Wasserstein and Applications to Generative Modeling - Spotlight Presentation and Improving Relational Regularized Autoencoders with Spherical Sliced Fused Gromov Wasserstein are accepted at ICLR 2021. |